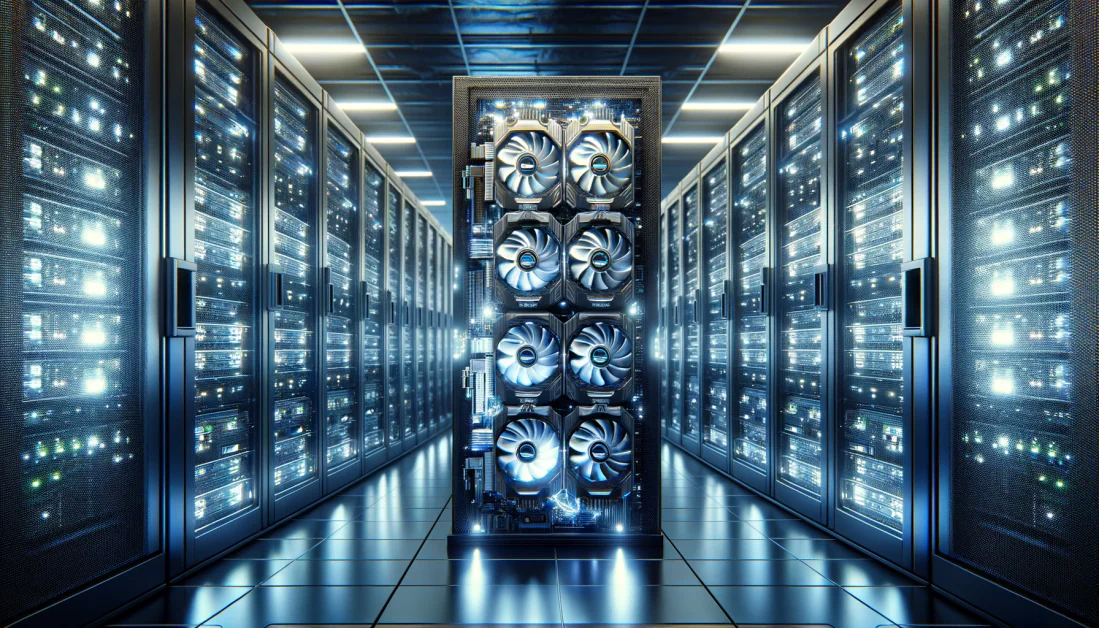

As artificial intelligence, data science, and real-time rendering become increasingly central to modern development, more developers are turning to GPU-powered hosting solutions. GPUs (Graphics Processing Units) offer massive parallel processing capabilities, enabling faster execution for compute-heavy tasks like machine learning, deep learning, and high-definition video rendering.

But for developers just starting out, or even seasoned engineers at lean startups, the key challenge is finding a GPU server that is powerful yet affordable. That’s where knowing the best low-cost GPU server providers, along with the right toolsets, can make all the difference.

In this guide, we’ll explore which languages, frameworks, and platforms align best with GPU hosting, and how developers can maximize performance without blowing their budget.

Why Developers Need GPU Hosting

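Developers today are often tasked with building more resource-intensive applications than ever before. Whether it’s computer vision, generative AI, or scientific modeling, these workflows are poorly suited to standard CPU hosting. A CPU has a relatively small number of powerful cores tuned for fast sequential, branch-heavy code, while a GPU has thousands of simpler cores that apply the same operation to many data elements at once. That data-parallel shape is exactly what matrix math in neural networks and per-pixel work in rendering look like.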

Here’s where GPU hosting comes in:

- Speed: Training jobs that would take days on a CPU can often finish in hours
- Efficiency: Heavy computations run remotely instead of tying up your local machine
- Scalability: Large parallel workloads or batch jobs can be spread across more GPUs as needed
- Cost Control: On-demand access without upfront investment in hardware
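The cost-control point comes down to simple arithmetic: renting makes sense until your cumulative hourly charges approach the price of buying a comparable card. The sketch below computes that break-even point. The $2,000 purchase price and $0.50/hour rate are hypothetical placeholders, not quotes from any provider, and the model deliberately ignores electricity, maintenance, and resale value.

```python
def break_even_hours(purchase_price: float, hourly_rate: float) -> float:
    """Hours of rented GPU time that cost as much as buying the hardware outright.

    Rough first-pass estimate only: ignores power, cooling, maintenance,
    and resale value of purchased hardware.
    """
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return purchase_price / hourly_rate


if __name__ == "__main__":
    # Hypothetical placeholder figures, not real provider pricing.
    hours = break_even_hours(purchase_price=2000.0, hourly_rate=0.50)
    print(f"Renting breaks even after {hours:.0f} GPU-hours "
          f"(about {hours / 24:.0f} days of continuous use)")
    # prints: Renting breaks even after 4000 GPU-hours (about 167 days of continuous use)
```

If your GPU would sit idle most of the time, you may never reach the break-even point, which is why on-demand rental tends to suit intermittent or experimental workloads.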

And with a growing number of low-cost GPU server providers, developers are now free to choose powerful infrastructure without the enterprise price tag.

Top Frameworks Optimized for GPU Hosting

Not all frameworks are created equal—some are specifically designed to take advantage of GPU acceleration. Here are a few key ones every developer should know:

1. TensorFlow

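A leading ML framework developed by Google, TensorFlow supports both training and inference on NVIDIA GPUs via CUDA and cuDNN. It runs on most cloud GPU instances, provided the installed NVIDIA driver and CUDA/cuDNN versions match what your TensorFlow release expects.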
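A quick way to confirm that TensorFlow can actually use the GPU on a freshly provisioned instance is to list the physical devices it detects. The sketch below relies on `tf.config.list_physical_devices`; the wrapper name `list_tensorflow_gpus` is hypothetical, and it deliberately returns an empty list when TensorFlow isn't installed so the script runs anywhere.

```python
import importlib.util

# True when TensorFlow is installed; lets the script run (and report no GPUs) without it.
HAS_TF = importlib.util.find_spec("tensorflow") is not None


def list_tensorflow_gpus() -> list[str]:
    """Return the names of the GPUs TensorFlow can see ([] if none or TF is missing)."""
    if not HAS_TF:
        return []
    import tensorflow as tf

    # Each entry is a PhysicalDevice whose name looks like "/physical_device:GPU:0".
    return [gpu.name for gpu in tf.config.list_physical_devices("GPU")]


if __name__ == "__main__":
    gpus = list_tensorflow_gpus()
    if gpus:
        print("TensorFlow sees:", ", ".join(gpus))
    else:
        print("No GPU visible to TensorFlow; check the NVIDIA driver and CUDA/cuDNN versions.")
```

An empty list on a machine you know has a GPU usually points to a driver or CUDA/cuDNN version mismatch rather than a problem in your code.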

2. PyTorch

Favored in the research community for its flexibility, PyTorch also supports GPU-accelerated computing through CUDA. It builds computation graphs dynamically as your code runs, which makes models easier to debug, and it is widely used for NLP and computer vision projects.
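In PyTorch, GPU use is explicit: you choose a device and create or move tensors onto it. Below is a minimal sketch of the common "use CUDA if available, else CPU" pattern, assuming an NVIDIA GPU and a CUDA-enabled PyTorch build; `pick_device` is a hypothetical helper name.

```python
import importlib.util

# True when PyTorch is installed; without it the sketch simply reports "cpu".
HAS_TORCH = importlib.util.find_spec("torch") is not None


def pick_device() -> str:
    """Return "cuda" if PyTorch can use an NVIDIA GPU, otherwise "cpu"."""
    if not HAS_TORCH:
        return "cpu"
    import torch

    return "cuda" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    device = pick_device()
    print("Using device:", device)
    if HAS_TORCH:
        import torch

        x = torch.randn(1024, 1024, device=device)  # allocated directly on the chosen device
        y = x @ x.T                                  # runs on the GPU when device == "cuda"
        print("Result shape:", tuple(y.shape))
```

Creating tensors directly on the target device avoids an extra host-to-GPU copy; models are moved the same way with `model.to(device)`.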

3. CUDA Toolkit

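Developed by NVIDIA, CUDA is the go-to platform for developers who want fine-grained control over how their code runs on NVIDIA GPUs, down to memory layout and thread organization. Kernels are written natively in C and C++, and Python developers can reach CUDA through libraries such as Numba, CuPy, or NVIDIA’s CUDA Python bindings.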
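The core idea CUDA exposes is that you launch a grid of thread blocks, and each thread computes its own global index to decide which element it works on. The sketch below emulates that mapping sequentially in plain Python so it runs anywhere. It is a teaching aid, not real CUDA code (which would be a C/C++ `__global__` kernel or a Numba/CuPy kernel), and the function names are hypothetical.

```python
def launch_config(n: int, threads_per_block: int = 256) -> tuple[int, int]:
    """Return (blocks, threads_per_block) so that blocks * threads_per_block >= n."""
    blocks = (n + threads_per_block - 1) // threads_per_block  # integer ceiling division
    return blocks, threads_per_block


def emulate_vector_add(a: list[float], b: list[float], threads_per_block: int = 256) -> list[float]:
    """Sequential emulation of a CUDA vector-add kernel.

    On a real GPU every (block, thread) pair runs concurrently; here we loop
    over them one by one to show how each thread finds its element.
    """
    n = len(a)
    out = [0.0] * n
    blocks, tpb = launch_config(n, threads_per_block)
    for block_idx in range(blocks):           # blockIdx.x
        for thread_idx in range(tpb):         # threadIdx.x
            # CUDA C equivalent: int i = blockIdx.x * blockDim.x + threadIdx.x;
            i = block_idx * tpb + thread_idx
            if i < n:                          # bounds guard: the last block may be partly idle
                out[i] = a[i] + b[i]
    return out


if __name__ == "__main__":
    print(launch_config(1000))  # (4, 256)
    print(emulate_vector_add([1.0, 2.0, 3.0], [10.0, 20.0, 30.0], threads_per_block=2))
    # [11.0, 22.0, 33.0]
```

The bounds check matters because the grid is rounded up to whole blocks: 1,000 elements with 256 threads per block launch 1,024 threads, and the last 24 must do nothing.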

4. OpenCV

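Widely used for computer vision tasks, OpenCV offers GPU-accelerated versions of many functions through its CUDA module (`cv2.cuda`) for real-time image processing, face detection, and more. Note that this requires an OpenCV build compiled with CUDA enabled; the standard `opencv-python` wheels from PyPI are CPU-only.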

5. Keras

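A high-level neural network API bundled with TensorFlow as `tf.keras` (and, since Keras 3, also able to run on JAX or PyTorch backends), Keras makes it easy to build and train deep learning models with minimal code. It uses the GPU automatically whenever the underlying backend can see one.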

Programming Languages That Play Well with GPUs

While most modern languages can interact with GPU-accelerated libraries, some are especially well-suited for GPU workflows:

- Python: By far the most popular language for AI and ML development, Python reaches the GPU through libraries like TensorFlow, PyTorch, and CuPy (a NumPy-compatible GPU array library; NumPy itself runs only on the CPU).
- C++: Offers high performance and direct integration with CUDA for low-level GPU computing.
- R: Preferred in statistical computing and data science, R has packages that use GPU resources for matrix operations and deep learning.
- JavaScript (via TensorFlow.js): Useful for building GPU-accelerated web apps and running ML models in the browser through its WebGL or WebGPU backends.
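Because CuPy mirrors much of NumPy’s API, a common Python pattern is to pick the array module once at import time and write the math only once. The sketch below assumes CuPy is installed only on GPU machines and falls back to NumPy elsewhere; `standardize` is a hypothetical helper used for illustration.

```python
try:
    import cupy as xp  # GPU arrays; needs an NVIDIA GPU and a CuPy build matching your CUDA version
    ON_GPU = True
except ImportError:
    import numpy as xp  # same array API, CPU only
    ON_GPU = False


def standardize(values):
    """Scale values to zero mean and unit variance using whichever backend was imported."""
    arr = xp.asarray(values, dtype=xp.float32)
    return (arr - arr.mean()) / arr.std()


if __name__ == "__main__":
    result = standardize([1.0, 2.0, 3.0, 4.0])
    # CuPy arrays live in GPU memory; .get() copies them back to a NumPy array on the host.
    host = result.get() if ON_GPU else result
    print("backend:", "cupy" if ON_GPU else "numpy", "->", host)
```

The same code path runs on a laptop for testing and on a GPU server for real workloads; only the import changes.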

Best Cheap GPU Server Providers for Developers

Here are some of the best low-cost GPU server providers, chosen for affordability, flexibility, and developer-friendliness:

| Provider | Key Features | Ideal For |
|---|---|---|
| HelloServer.tech | Affordable GPU plans, customizable servers, optimized for AI/ML | Startups, solo developers, game dev |
| Paperspace | Developer-focused GPU cloud, Jupyter notebooks, low-cost hourly rates | AI, prototyping, visual tasks |
| Vast.ai | Decentralized GPU marketplace with dynamic pricing | Cost-sensitive users and testers |
| Lambda Labs | Premium GPU servers with hourly and reserved pricing | AI research, ML training |
| RunPod | Budget-friendly GPU nodes with pre-configured ML environments | Data scientists and app developers |

Choosing the right provider depends on your exact use case—whether you need a full bare-metal server, shared access, or a containerized runtime.

Tips for Getting the Most Out of Your GPU Hosting Plan

- Match GPU specs with workload: Don’t overpay for top-tier GPUs like the A100 if a T4 or P100 can handle your task.
- Use spot or preemptible instances: Some providers offer these at reduced prices if you can tolerate occasional interruptions. Save checkpoints regularly so an interrupted job can resume instead of starting over.
- Leverage prebuilt environments: Choose images with ML tools already installed (e.g., Jupyter + CUDA + PyTorch) to save setup time.
- Monitor and optimize usage: Use tools like nvidia-smi, Prometheus (paired with a GPU metrics exporter such as NVIDIA’s DCGM Exporter), or provider dashboards to track resource utilization.
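For a quick check without setting up Prometheus, `nvidia-smi` can print machine-readable CSV via its `--query-gpu` and `--format=csv,noheader,nounits` options. The sketch below polls it once and parses the result. `GpuStats`, `parse_nvidia_smi_csv`, and `query_gpus` are hypothetical names, and in practice you might run this on a schedule or feed the numbers into your own dashboard.

```python
from __future__ import annotations

import subprocess
from dataclasses import dataclass

# Name goes last so a GPU name containing a comma cannot break the split below.
QUERY_FIELDS = "index,utilization.gpu,memory.used,memory.total,name"


@dataclass
class GpuStats:
    index: int
    util_percent: int | None   # None when nvidia-smi reports "[N/A]" or similar
    mem_used_mib: int | None
    mem_total_mib: int | None
    name: str


def _to_int(field: str) -> int | None:
    """Convert a numeric CSV field, mapping bracketed placeholders like "[N/A]" to None."""
    field = field.strip()
    return None if field.startswith("[") else int(field)


def parse_nvidia_smi_csv(text: str) -> list[GpuStats]:
    """Parse `nvidia-smi --query-gpu=QUERY_FIELDS --format=csv,noheader,nounits` output."""
    stats = []
    for line in text.strip().splitlines():
        index, util, used, total, name = line.split(",", 4)  # at most 5 fields
        stats.append(GpuStats(int(index), _to_int(util), _to_int(used), _to_int(total), name.strip()))
    return stats


def query_gpus() -> list[GpuStats]:
    """Run nvidia-smi once and parse its output."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_nvidia_smi_csv(result.stdout)


if __name__ == "__main__":
    try:
        for gpu in query_gpus():
            print(f"GPU {gpu.index} ({gpu.name}): util={gpu.util_percent}%, "
                  f"memory={gpu.mem_used_mib}/{gpu.mem_total_mib} MiB")
    except (FileNotFoundError, subprocess.CalledProcessError):
        print("nvidia-smi not available; is the NVIDIA driver installed?")
```

Consistently low utilization on an expensive GPU is a hint that the workload might fit a cheaper card.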

Final Thoughts

For many developers, GPUs are no longer a luxury; they’re a necessity for building fast, intelligent, and scalable software. Whether you’re training AI models, processing video streams, or developing 3D visualizations, GPU hosting gives you the horsepower to run high-performance workloads in the cloud.

The best part? You don’t have to break the bank. With a growing number of low-cost GPU server providers, even individual developers can tap into enterprise-grade power without massive upfront costs.

As long as you choose the right framework, the right provider, and the right tools for your language of choice, GPU hosting will help you code smarter, deploy faster, and deliver better results—no lag included.